I was recently having a look at my GitHub repositories page. I sometimes tidy up my projects as I would my living room, trying to imagine what a guest would think of me based on what I keep around. It’s interesting to realize how far I’ve come since some of my old projects. One of them, though, caught my attention: I hadn’t noticed before, but a few users had starred it and even forked it over time.

Not many people, mind you, but I’m still glad to think that someone used this code even though I had never advertised it or talked about it anywhere. Come to think of it, I know programmers who spend weeks at work writing code that no user ever runs. And this wasn’t my first open source project: I was lucky to be an active and enthusiastic member of the NLTK team, so I know the feeling of contributing to a much larger community (exciting!). Still, these GitHub stars for my little project feel extremely rewarding, probably because I wasn’t expecting them and they come from an earlier time, before I relocated to another country and kindly nudged my life into new directions.

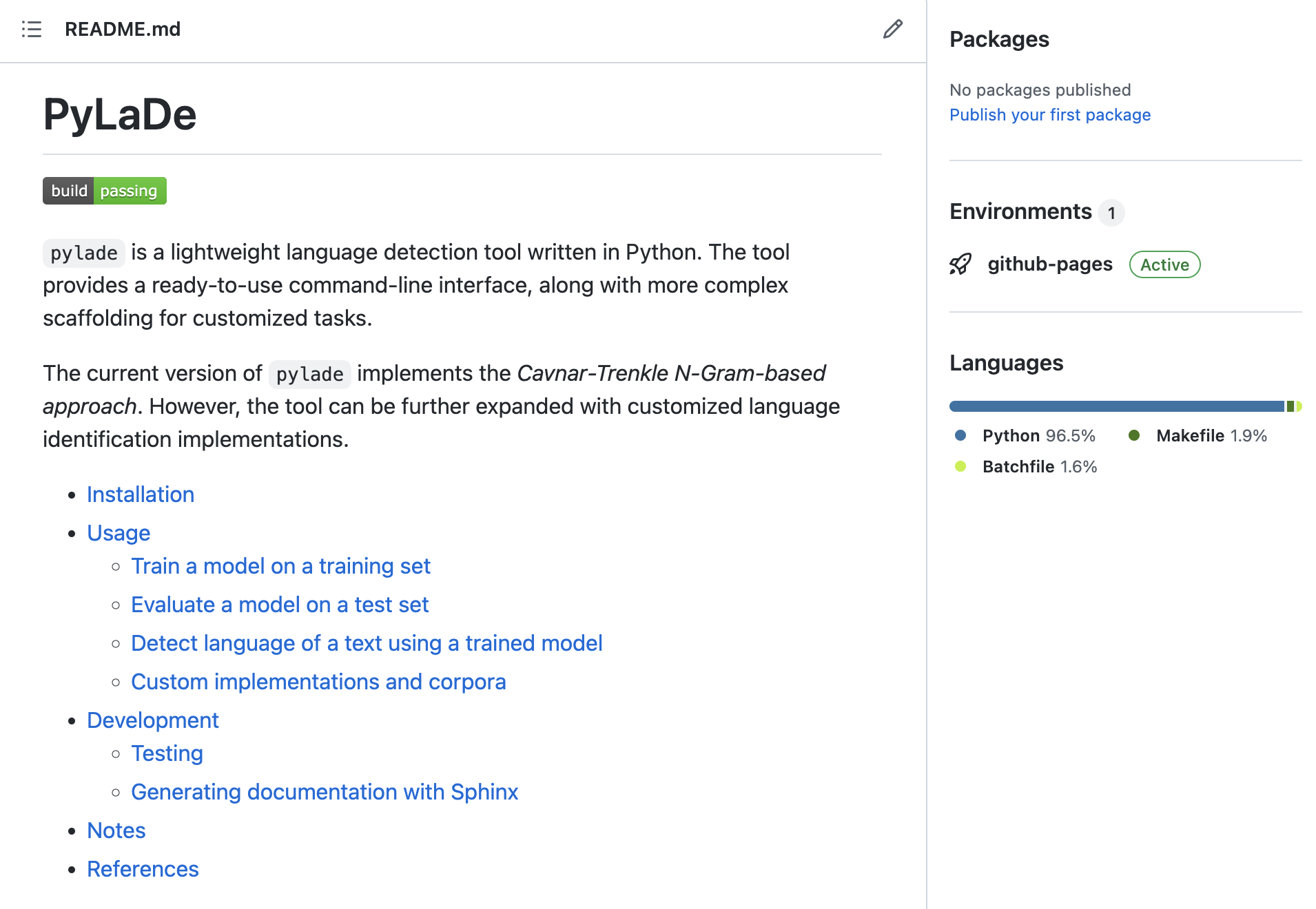

The project is called PyLaDe (Python Language Detection). It’s a simple command-line interface for language detection, with some additional features to train and test custom models. The interesting part (for me) is that I spent quite a while refining it back then, as I wanted to fiddle a bit with Python packaging and documentation. It looks like someone appreciated the effort, so I decided to update it a little and bring it up to speed with today’s practices.

The first step was to drop support for old Python versions and add support for new ones. I extended the Tox tests to run against the latest versions, and at that point I realized the project’s dependencies were outdated. Rather than just editing the requirements.txt file, I decided to switch to Poetry and modernize part of the stack.

Adopting Poetry and its pyproject.toml configuration meant getting rid of the old setup.py. This turned out to be interesting, since it also gave me a historical look at decades of Python’s best practices and community questions. Besides enjoying this little paleo-programming research, I came to appreciate both the elegance of Poetry as a new-ish tool and the simplicity of the good ol’ ways.

After these changes, it was time to brush up on Semantic Versioning and finally add tags for the project’s releases. One of my biggest complaints about the old version of the project was that it could only be installed locally, after cloning the repository from GitHub. That’s why I decided to go all in this time and upload PyLaDe to PyPI, so installation is now as easy as it gets:

$ pip install pylade

I bumped some minor versions along the way and enjoyed seeing my creature evolve.
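For anyone else brushing up on Semantic Versioning, the core rule fits in a few lines: in a MAJOR.MINOR.PATCH version you bump MAJOR for incompatible changes, MINOR for backward-compatible features and PATCH for backward-compatible fixes, and every lower component resets to zero. The Python sketch below is only an illustration, not PyLaDe’s code or Poetry’s implementation (Poetry can do this for you with `poetry version minor` and similar); the `Version` class and its methods are hypothetical names, and pre-release tags and build metadata are deliberately left out.

```python
import re
from typing import NamedTuple

# Plain MAJOR.MINOR.PATCH only (no pre-release or build metadata); leading zeros are rejected
_SEMVER_RE = re.compile(r"^(0|[1-9]\d*)\.(0|[1-9]\d*)\.(0|[1-9]\d*)$")


class Version(NamedTuple):
    major: int
    minor: int
    patch: int

    @classmethod
    def parse(cls, text: str) -> "Version":
        # Accept git-style tags such as "v1.2.3" by stripping the leading "v"
        match = _SEMVER_RE.match(text.lstrip("v"))
        if match is None:
            raise ValueError(f"not a MAJOR.MINOR.PATCH version: {text!r}")
        return cls(*(int(part) for part in match.groups()))

    def bump(self, part: str) -> "Version":
        # Incrementing a component resets every lower-order component to 0
        if part == "major":
            return Version(self.major + 1, 0, 0)
        if part == "minor":
            return Version(self.major, self.minor + 1, 0)
        if part == "patch":
            return Version(self.major, self.minor, self.patch + 1)
        raise ValueError(f"unknown version part: {part!r}")

    def __str__(self) -> str:
        return f"{self.major}.{self.minor}.{self.patch}"
```

For example, `str(Version.parse("v0.2.1").bump("minor"))` gives `"0.3.0"`. Comparing versions as tuples of integers, rather than as strings, is what makes 1.10.0 sort after 1.9.3.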

One unexpectedly tricky part was finding a good way to automatically generate the documentation with Sphinx and publish it on GitHub Pages. As odd as it sounds, Sphinx doesn’t yet provide a straightforward way to build and publish to GitHub Pages (see this issue). I found several GitHub Actions that offer a similar workflow, but they all felt like overkill. In the end I decided to just add a few lines to the Sphinx Makefile that build the docs and move them to the docs/ folder (the one served by GitHub Pages) in the gh-pages branch.
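The Makefile lines themselves aren’t reproduced here, but the same idea can be sketched in Python: once Sphinx has built the HTML (for example with `sphinx-build -b html <source> <build>/html`), replace the docs/ folder with a fresh copy of that output. The `publish_html` helper and the build/html path are assumptions for illustration. One GitHub Pages detail worth knowing: by default it runs the site through Jekyll, which ignores folders whose names start with an underscore, such as Sphinx’s `_static/`, so the sketch drops an empty `.nojekyll` file into the published folder. Because the function deletes docs/ before copying, it assumes that folder only ever holds generated output, as on a dedicated gh-pages branch.

```python
import shutil
from pathlib import Path


def publish_html(html_dir: Path, docs_dir: Path) -> None:
    """Hypothetical helper: replace docs_dir with a fresh copy of Sphinx's HTML output."""
    if not (html_dir / "index.html").is_file():
        raise FileNotFoundError(f"no index.html in {html_dir}; run the Sphinx build first")
    # Start from a clean folder so pages removed from the sources disappear too.
    # WARNING: this deletes docs_dir entirely, so it must contain only generated files.
    if docs_dir.exists():
        shutil.rmtree(docs_dir)
    shutil.copytree(html_dir, docs_dir)
    # GitHub Pages runs Jekyll by default, which skips folders starting with "_"
    # (such as Sphinx's _static/); an empty .nojekyll file turns Jekyll off
    (docs_dir / ".nojekyll").touch()
```

From the repository root this would be called as `publish_html(Path("build/html"), Path("docs"))`, followed by the usual commit and push on the gh-pages branch.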

The project is far from optimal and is mostly intended for study purposes. It’s also quite fun to look at it today, in the era of Large Language Models and their capabilities. Still, it gave me some weekend fun and learning. If anyone else enjoys it and wants to use it to study the Cavnar-Trenkle N-gram-based approach to text categorization, feel free to star the project: I’ll be grateful.
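For anyone curious about the method itself, here is a compact sketch of the idea in Python, written for this post rather than taken from PyLaDe’s code. Each text is reduced to a ranked profile of its most frequent character n-grams (lengths 1 to 5), and a document is assigned to the language whose profile is closest under the “out-of-place” measure: the sum, over the document’s n-grams, of how far each one’s rank is from its rank in the language profile, with a fixed maximum penalty for n-grams the language profile lacks. The profile size of 300, the “_” word-boundary padding, the letters-only tokenization and the penalty value are simplifying assumptions, and the function names are hypothetical.

```python
import re
from collections import Counter

PROFILE_SIZE = 300  # assumed profile length (a commonly used value)
MAX_N = 5           # n-grams of length 1 to 5


def ngram_profile(text: str, max_n: int = MAX_N, size: int = PROFILE_SIZE) -> dict[str, int]:
    """Map each of the `size` most frequent n-grams in `text` to its rank (0 = most frequent)."""
    counts = Counter()
    # Keep only runs of letters (any alphabet); digits and punctuation are dropped
    for word in re.findall(r"[^\W\d_]+", text.lower()):
        padded = f"_{word}_"  # "_" marks word boundaries (simplified padding)
        for n in range(1, max_n + 1):
            for i in range(len(padded) - n + 1):
                counts[padded[i:i + n]] += 1
    # Most frequent first; ties broken alphabetically so ranks are deterministic
    ranked = sorted(counts, key=lambda gram: (-counts[gram], gram))[:size]
    return {gram: rank for rank, gram in enumerate(ranked)}


def out_of_place(doc: dict[str, int], category: dict[str, int], penalty: int = PROFILE_SIZE) -> int:
    """Out-of-place distance: sum of rank displacements between the two profiles."""
    return sum(
        abs(rank - category[gram]) if gram in category else penalty  # unseen n-gram
        for gram, rank in doc.items()
    )


def detect(text: str, models: dict[str, dict[str, int]]) -> str:
    """Return the label whose profile is closest to the profile of `text`."""
    doc = ngram_profile(text)
    return min(models, key=lambda label: out_of_place(doc, models[label]))


if __name__ == "__main__":
    # Toy training sentences, only to exercise the code
    models = {
        "en": ngram_profile("the quick brown fox jumps over the lazy dog"),
        "it": ngram_profile("la volpe veloce salta sopra il cane pigro"),
    }
    print(detect("the dog is sleeping", models))
```

Toy sentences like these only show the mechanics; meaningful profiles should be built from a reasonably large sample of text per language.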